When AI Forgets: Understanding and Fighting Context Rot in Large Language Models

- Dec 23, 2025


Generative AI feels like magic: it answers long questions, maintains conversations, writes essays, and synthesizes large knowledge bases. But beneath the impressive facade lies a subtle and growing vulnerability: Context Rot.

Context Rot is the surprising phenomenon where adding more information actually makes AI models worse — not better. The more you feed these systems, the more they struggle to retrieve the right details, reason accurately, or stay coherent. This poses challenges for everything from customer support bots to coding assistants.

Let’s unpack what Context Rot is, why it happens, and how we can fight back.

📉 What Is Context Rot?

At its core, Context Rot refers to the degradation in output quality when an AI model processes very long or complex input context. Intuitively, you’d think that giving a model more data would help; instead, it often harms performance:

- Outputs become less accurate
- Relevance decreases as important facts get “buried” amid noise
- Models hallucinate or contradict themselves
- Even simple questions get answered incorrectly

Think of trying to recall a fact after someone reads you hundreds of pages; as the story drags on, your memory of the earlier parts fades. AI models face a similar struggle.

📊 The Data: When More Is Less

The intuition sounds logical: more context should equal better answers. But real-world benchmarks tell a very different story.

Across multiple evaluations — especially “Needle in a Haystack” tests — large language models exhibit a distinctive U-shaped performance curve.

🔍 What Are “Needle in a Haystack” Tests?

These tests insert a small, critical fact (the needle) somewhere inside an extremely large body of irrelevant or semi-relevant text (the haystack). The model is then asked to retrieve or reason using that fact.

In theory, models with massive context windows should excel.

In practice, they don’t.
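A needle-in-a-haystack case is easy to construct yourself. The sketch below is a minimal version under simple assumptions: the haystack is repeated filler text, length is counted in words rather than model tokens, and the model call itself is left out so any LLM client can be plugged in. The helpers `build_haystack` and `score`, and the passphrase and filler strings, are hypothetical illustrations.

```python
def build_haystack(needle: str, filler: str, total_words: int, depth: float) -> str:
    """Embed `needle` at a relative `depth` (0.0 = start, 1.0 = end) inside
    `total_words` words of repeated filler text."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0.0 and 1.0")
    filler_words = filler.split()
    # Repeat the filler until there are enough words, then trim to size.
    words = (filler_words * (total_words // len(filler_words) + 1))[:total_words]
    insert_at = round(depth * len(words))
    return " ".join(words[:insert_at] + needle.split() + words[insert_at:])


def score(model_answer: str, expected: str) -> bool:
    """Count a trial as a pass if the expected answer appears in the reply."""
    return expected.lower() in model_answer.lower()


# Build a grid of prompts across context sizes and needle positions.
needle = "The secret passphrase is 'blue-harbor-42'."
filler = "The committee reviewed routine budget items and adjourned without comment."
question = "What is the secret passphrase?"

cases = []
for total_words in (1_000, 10_000, 50_000):
    for depth in (0.0, 0.25, 0.5, 0.75, 1.0):
        prompt = build_haystack(needle, filler, total_words, depth) + "\n\n" + question
        cases.append({"words": total_words, "depth": depth, "prompt": prompt})
```

Each prompt would then be sent to the model; grouping pass rates by `depth` and by `words` gives accuracy as a function of needle position and of context length.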

📉 The U-Shaped Curve Explained

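The U shape shows up most clearly when accuracy is plotted against where the needle sits: retrieval is strongest when the fact appears near the beginning or end of the prompt and weakest when it is buried in the middle. Plotted against total context length, accuracy rises and then falls instead of steadily improving:

Limited → peaks → degrades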

Why this happens:

Short Context (Too Little Information)

- Model lacks enough background
- Accuracy is limited but predictable

Medium Context (Optimal Zone)

- Key facts are visible
- Attention is focused
- Reasoning is strongest

Long Context (Context Rot Zone)

- Signal is drowned in noise
- Attention becomes diluted
- Retrieval and reasoning degrade

📌 Key Insight: Even when a model supports 100k–1M tokens, its effective context (the span over which it actually reasons reliably) is often 10–20× smaller.
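One way to make “effective context” concrete is to define it as the longest tested context length at which measured accuracy stays at or above a chosen threshold. The helper below is a hypothetical sketch of that definition; the 0.9 default threshold is an arbitrary assumption, and the accuracy figures would come from your own evaluations (for example, needle-in-a-haystack pass rates), not from this snippet.

```python
from typing import Optional


def effective_context(accuracy_by_length: dict[int, float],
                      threshold: float = 0.9) -> Optional[int]:
    """Return the longest context length whose accuracy, and that of every
    shorter tested length, is at or above `threshold`.

    `accuracy_by_length` maps a context length in tokens to measured accuracy
    in [0, 1]. Returns None if even the shortest tested length falls short.
    """
    best = None
    for length in sorted(accuracy_by_length):
        if accuracy_by_length[length] < threshold:
            break  # stop at the first length where reliability drops off
        best = length
    return best
```

Dividing a model’s advertised window by the length this returns gives the kind of ratio the key insight describes.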

🔍 Why Bigger Isn’t Always Better

Most people assume that a model with a bigger context window is smarter. But real tests show a different pattern:

- More tokens → slower responses
- More unrelated data → higher hallucination rates
- Long contexts often hide the most relevant facts
- Models lose the ability to reason coherently

This holds even for advanced models that advertise million-token contexts. The promise of “infinite memory” hasn’t solved the core challenge, which is prioritizing relevant memory rather than simply enlarging it.

⚙️ The Mechanics of Forgetting

Why does context rot happen at a technical level? The answer lies in the attention mechanism at the heart of transformer models, and in the Key–Value (KV) cache that stores each token’s keys and values during generation.

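In a transformer, each token effectively “looks at” the other tokens in the context (in decoder-only chat models, every earlier token) to decide what matters. The number of these pairwise interactions grows as O(n²) in the context length n. As context grows, attention doesn’t just expand; it explodes.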
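The arithmetic behind that growth is easy to check. This sketch counts the query-key scores a single attention head computes for n tokens (n² without a mask, n(n+1)/2 with a causal mask, where each token sees only itself and earlier tokens) and contrasts that with the KV cache, which grows only linearly in n. The parameters of `kv_cache_bytes` are hypothetical model dimensions, not the configuration of any particular model.

```python
def attention_scores(n: int, causal: bool = True) -> int:
    """Number of query-key scores one attention head computes for n tokens.

    With a causal mask, token i attends to itself and every token before it,
    giving 1 + 2 + ... + n = n(n+1)/2 scores; without a mask it is n * n.
    """
    return n * (n + 1) // 2 if causal else n * n


def kv_cache_bytes(n: int, layers: int, kv_heads: int, head_dim: int,
                   bytes_per_value: int = 2) -> int:
    """Memory for cached keys and values, which is linear in n.

    The factor 2 covers one key and one value vector per token, per head,
    per layer; bytes_per_value=2 assumes 16-bit (fp16/bf16) storage.
    """
    return 2 * n * layers * kv_heads * head_dim * bytes_per_value


for n in (1_000, 10_000, 100_000):
    print(f"{n:>7} tokens: {attention_scores(n):>14,} causal scores per head per layer")
```

Going from 10,000 to 100,000 tokens multiplies the KV cache by 10 but the causal score count by roughly 100 (about 50 million versus 5 billion per head, per layer).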

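When a 128k context window is filled, the model isn’t merely reading more text. It has to weigh more than a hundred thousand tokens, most of them irrelevant to the question, against each other. Because each token’s attention weights are normalized by a softmax so that they sum to 1, every distractor that earns even a small share takes weight away from the tokens that matter. Signal gets diluted, attention fragments, and reasoning weakens.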
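A toy calculation makes this dilution concrete. Assume a single query, one relevant token with attention logit s, and n - 1 distractors with logit 0; these uniform scores are a deliberate simplification, since real attention patterns are learned and far less uniform. The relevant token’s softmax share is then e^s / (e^s + n - 1). The function name `relevant_weight` is illustrative.

```python
import math


def relevant_weight(n_tokens: int, relevant_logit: float = 5.0) -> float:
    """Softmax weight on one relevant token among n_tokens - 1 distractors.

    Toy assumption: the relevant token has logit `relevant_logit` and every
    distractor has logit 0, so each distractor adds exp(0) = 1 to the denominator.
    """
    boosted = math.exp(relevant_logit)
    return boosted / (boosted + (n_tokens - 1))


for n in (10, 1_000, 100_000):
    print(f"{n:>7} tokens -> weight on the relevant token: {relevant_weight(n):.4f}")
```

With the default logit of 5, the relevant token’s share falls from roughly 0.94 with 10 tokens to roughly 0.0015 with 100,000, even though its own score never changes.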

A related effect is what researchers call the “lost in the middle” problem, sometimes described as a mid-context valley: information placed neither at the beginning nor the end of a long prompt becomes the least retrievable. The model technically sees the data, but practically forgets it.
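One common workaround for this positional weakness is to control where the most important material lands. The sketch below assumes the chunks are already ranked from most to least relevant (by whatever retriever you use) and places them alternately at the front and back of the prompt, so the lowest-ranked chunks end up in the middle. `edge_order` is a hypothetical, illustrative function, not any library’s API.

```python
def edge_order(ranked_chunks: list[str]) -> list[str]:
    """Reorder chunks (given best-first) so the strongest sit at both ends.

    Rank 1 goes first, rank 2 goes last, rank 3 second, rank 4 second-to-last,
    and so on, pushing the lowest-ranked chunks toward the middle.
    """
    front: list[str] = []
    back: list[str] = []
    for i, chunk in enumerate(ranked_chunks):
        if i % 2 == 0:
            front.append(chunk)
        else:
            back.append(chunk)
    return front + back[::-1]
```

For five chunks ranked a through e, the prompt order becomes a, c, e, d, b.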

🧠 When AI “Forgets” What Matters

Imagine giving an AI a 100,000-token codebase and asking it to fix a specific bug — the model might miss the crucial function entirely because it got overwhelmed by distractions. This is precisely what context rot can do in real systems.

You don’t get smarter outputs just by piling on data; you get dumber results with more noise, especially on tasks requiring deep reasoning or specific recall.

💡 How to Defeat Context Rot

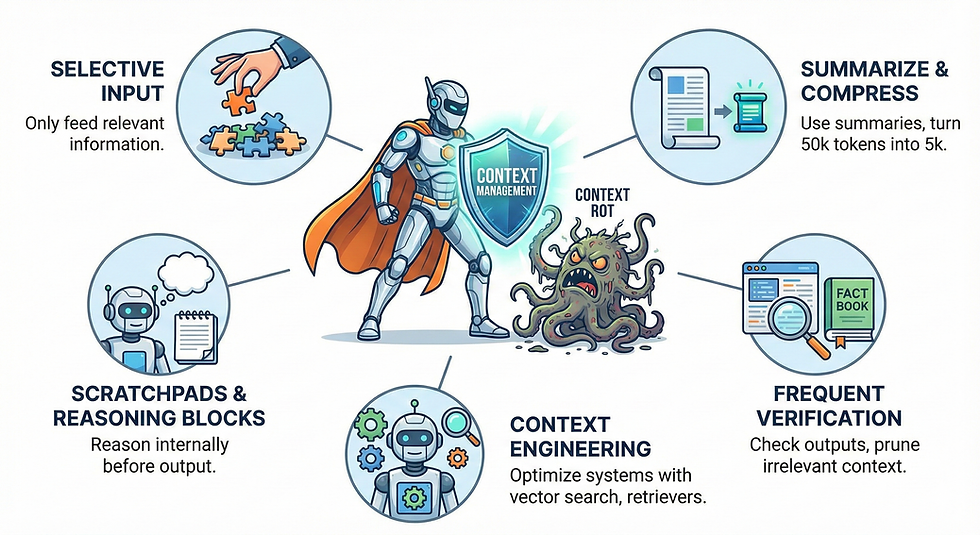

Context Rot isn’t an unsolvable puzzle — it’s a context management problem. Here are practical strategies to mitigate it:

🧩 1. Selective Input

Only feed the most relevant pieces of information — not entire histories or whole documents.
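In practice, selective input often means packing the highest-value chunks into a fixed token budget. This minimal sketch assumes each chunk already has a relevance score (from a retriever, a reranker, or a heuristic) and approximates token counts by word counts; a real system would use the model’s own tokenizer. `Chunk`, `approx_tokens`, and `select_within_budget` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    text: str
    relevance: float  # higher means more relevant to the current query


def approx_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: one token per whitespace-separated word.
    return len(text.split())


def select_within_budget(chunks: list[Chunk], budget: int) -> list[Chunk]:
    """Greedily keep the most relevant chunks that fit within `budget` tokens."""
    selected: list[Chunk] = []
    used = 0
    for chunk in sorted(chunks, key=lambda c: c.relevance, reverse=True):
        cost = approx_tokens(chunk.text)
        # Skip chunks that would overflow; smaller, less relevant ones may still fit.
        if used + cost <= budget:
            selected.append(chunk)
            used += cost
    return selected
```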

📝 2. Summarize and Compress

Use summaries instead of raw text. A model can condense, say, 50k tokens of raw history into a 5k-token summary that keeps the essential facts.
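A common way to apply this to conversations is a rolling summary: keep the most recent turns verbatim and fold older ones into a running summary. The sketch assumes a caller-supplied `summarize` function (typically another model call); it is a placeholder, not a real API, and `stub_summarize` merely truncates text so the example runs on its own.

```python
from typing import Callable


def compress_history(
    turns: list[str],
    summarize: Callable[[str], str],
    keep_recent: int = 4,
    prior_summary: str = "",
) -> tuple[str, list[str]]:
    """Fold all but the last `keep_recent` turns into a summary.

    Returns (new_summary, recent_turns). `summarize` is supplied by the
    caller, e.g. a wrapper around an LLM call; it is not defined here.
    """
    if keep_recent < 0:
        raise ValueError("keep_recent must be non-negative")
    if len(turns) <= keep_recent:
        return prior_summary, turns
    cut = len(turns) - keep_recent
    older, recent = turns[:cut], turns[cut:]
    # Re-summarize the previous summary together with the evicted turns,
    # so information folded in earlier is carried forward.
    parts = [prior_summary, *older] if prior_summary else older
    return summarize("\n".join(parts)), recent


def stub_summarize(text: str) -> str:
    # Stand-in for a model call: keep only the first 40 characters.
    return text[:40]


summary, recent = compress_history([f"turn {i}" for i in range(10)], stub_summarize)
```

The next prompt would then contain `summary` followed by the `recent` turns.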

📌 3. Scratchpads and Reasoning Blocks

Let the model work through its reasoning in a separate workspace before producing its final output.
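One way to keep a scratchpad from bloating later turns is to ask for reasoning and answer in separately marked sections, then carry only the answer forward. The `<scratchpad>` and `<answer>` tags and the names `SCRATCHPAD_INSTRUCTIONS` and `extract_answer` are assumptions of this sketch, not a feature of any particular model or API.

```python
import re

# Hypothetical instruction asking the model to separate its work from its answer.
SCRATCHPAD_INSTRUCTIONS = (
    "Think through the problem inside <scratchpad>...</scratchpad>, "
    "then give only the final answer inside <answer>...</answer>."
)

ANSWER_RE = re.compile(r"<answer>(.*?)</answer>", re.DOTALL)


def extract_answer(model_output: str) -> str:
    """Return the text inside <answer> tags, dropping the scratchpad.

    Falls back to the whole output if the model ignored the format.
    """
    match = ANSWER_RE.search(model_output)
    return match.group(1).strip() if match else model_output.strip()


reply = (
    "<scratchpad>The invoice total is 40 + 2 = 42.</scratchpad>\n"
    "<answer>42</answer>"
)
history_entry = extract_answer(reply)  # only "42" is kept for later turns
```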

🔍 4. Frequent Verification

Constantly check outputs against known facts and prune outdated or irrelevant context.
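The pruning half of this step can be as simple as dropping entries that have expired or been superseded. The sketch below assumes each context entry carries a topic key and a timestamp; the `ContextItem` type, both fields, and the one-hour default lifetime are illustrative assumptions.

```python
import time
from dataclasses import dataclass
from typing import Optional


@dataclass
class ContextItem:
    key: str          # what the item is about, e.g. "shipping_address"
    text: str
    timestamp: float  # seconds since the epoch when the item was added


def prune(items: list[ContextItem], max_age_s: float = 3600.0,
          now: Optional[float] = None) -> list[ContextItem]:
    """Drop expired items and keep only the newest item for each key."""
    now = time.time() if now is None else now
    newest: dict[str, ContextItem] = {}
    for item in items:
        if now - item.timestamp > max_age_s:
            continue  # outdated: older than the allowed lifetime
        current = newest.get(item.key)
        if current is None or item.timestamp > current.timestamp:
            newest[item.key] = item  # a newer fact supersedes the older one
    # Return survivors in chronological order.
    return sorted(newest.values(), key=lambda it: it.timestamp)
```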

🤖 5. Context Engineering

Design systems that optimize what the model sees instead of blanketing it with everything. This includes tools like vector search, retrievers, and dynamic memory pruning.
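At the core of vector search is ranking stored chunks by similarity to the query. The toy retriever below uses bag-of-words count vectors and cosine similarity so it runs with only the standard library; a production system would swap in a learned embedding model and a vector index. The functions `embed`, `cosine`, and `retrieve` are hypothetical names.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Toy "embedding": lowercase word counts. Real systems use learned embeddings.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int = 3) -> list[str]:
    """Return the k chunks most similar to the query, best first."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:k]
```

Only the top-k chunks go into the prompt, which keeps the context small without the model ever seeing the rest of the corpus.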

🧠 The Future: Smarter Memory, Not Bigger Memory

To truly defeat context rot, research must pivot from simply enlarging memory windows to building memory architectures that enable:

- Selective attention
- Efficient recall
- Context relevance ranking
- Adaptive forgetting

This is similar to how humans remember — not by storing every detail, but by keeping what matters and letting the rest fade.

In other words: Smarter memory beats bigger memory.
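The adaptive forgetting idea above can be prototyped with a decaying strength score: each memory fades exponentially with every time step, is reinforced whenever it is recalled, and is evicted once it falls below a threshold. Everything here (the `Memory` and `DecayingMemory` classes, the half-life, the recall boost, and the eviction threshold) is an illustrative assumption rather than an established architecture.

```python
from dataclasses import dataclass


@dataclass
class Memory:
    text: str
    strength: float = 1.0


class DecayingMemory:
    """Toy store in which memories fade unless they are used."""

    def __init__(self, half_life_steps: float = 10.0, evict_below: float = 0.1,
                 recall_boost: float = 1.0):
        self.decay = 0.5 ** (1.0 / half_life_steps)  # per-step multiplier
        self.evict_below = evict_below
        self.recall_boost = recall_boost
        self.items: list[Memory] = []

    def add(self, text: str) -> None:
        self.items.append(Memory(text))

    def step(self) -> None:
        """Advance one time step: decay every memory and forget weak ones."""
        for m in self.items:
            m.strength *= self.decay
        self.items = [m for m in self.items if m.strength >= self.evict_below]

    def recall(self, keyword: str) -> list[str]:
        """Return matching memories and reinforce them for being useful."""
        hits = [m for m in self.items if keyword.lower() in m.text.lower()]
        for m in hits:
            m.strength += self.recall_boost
        return [m.text for m in hits]
```

Memories that keep proving useful survive, while untouched ones fade out on their own, which is the “keep what matters, let the rest fade” behavior in miniature.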

📌 Wrap-Up

Context Rot is one of AI’s stealthiest challenges. It reveals a fragile truth: more data can make AI less reliable. But with smart design (context engineering, pruning, summaries, and verification), we can tame the rot and unlock more accurate, dependable AI systems.
